Bagging Predictors. Machine Learning

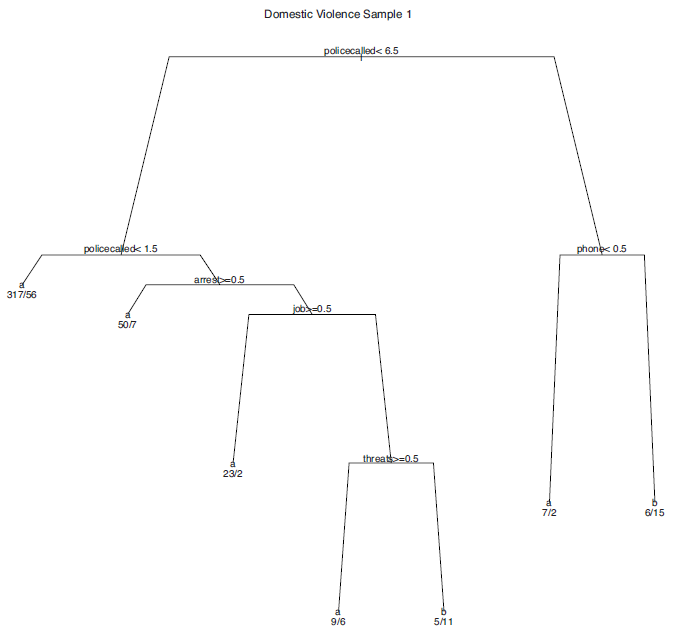

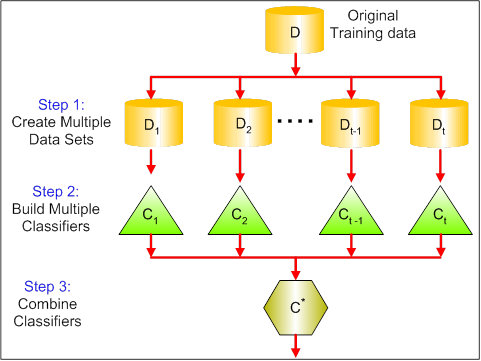

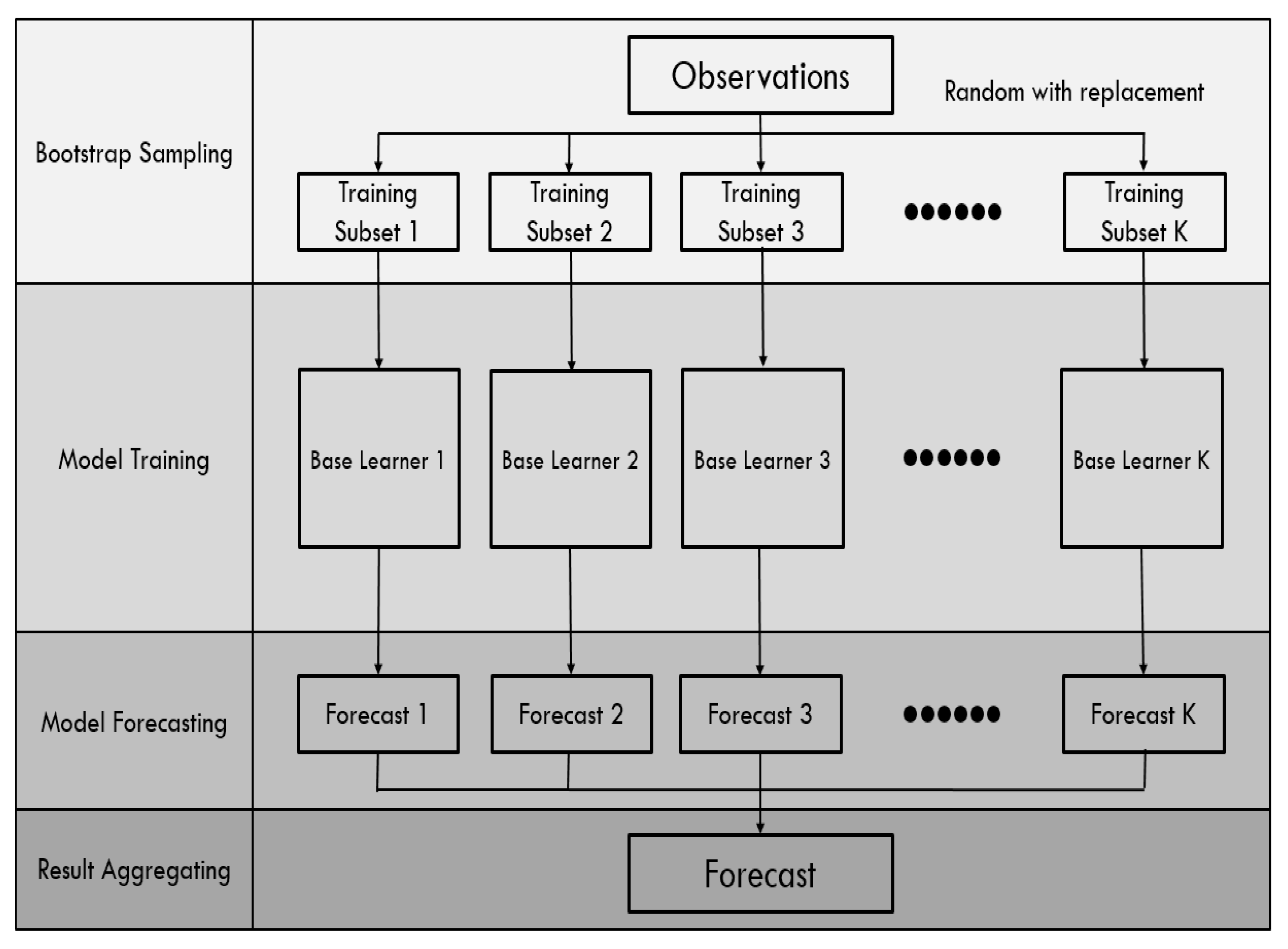

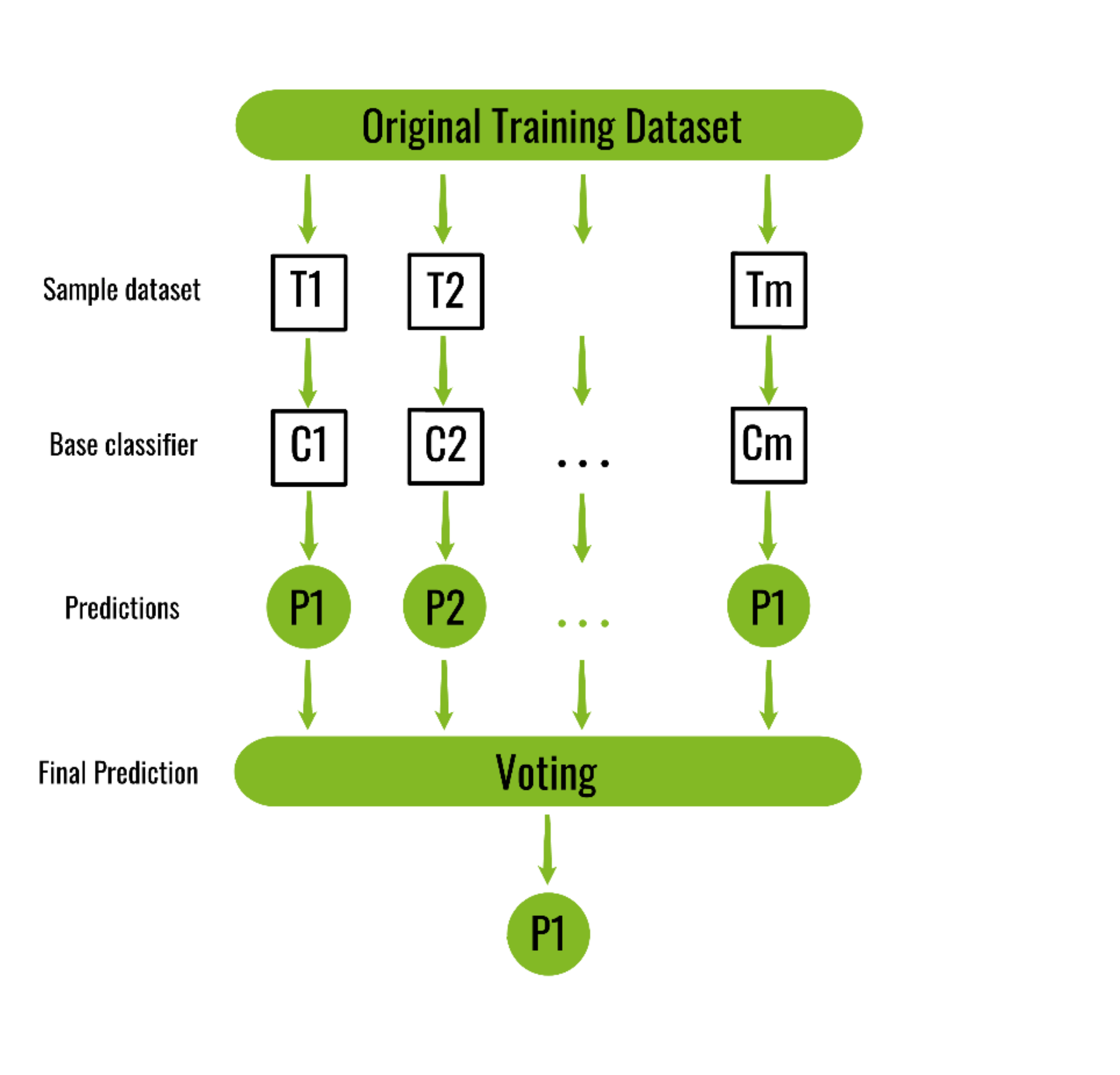

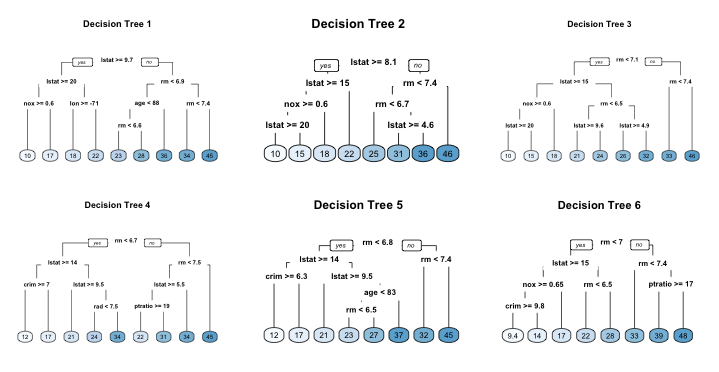

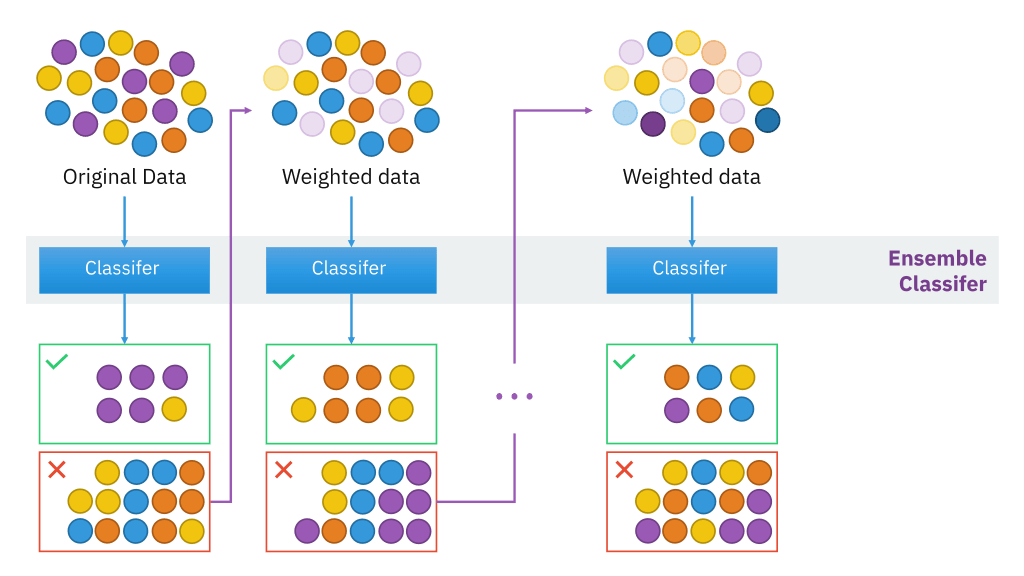

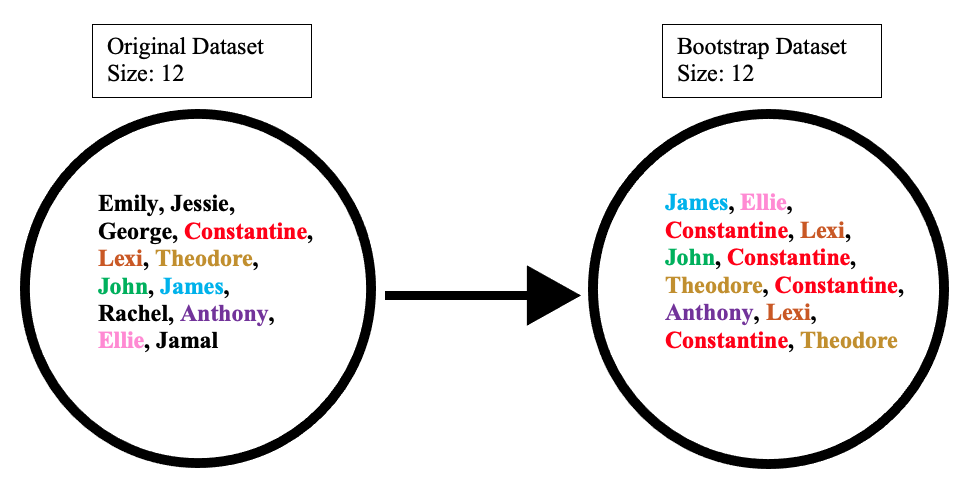

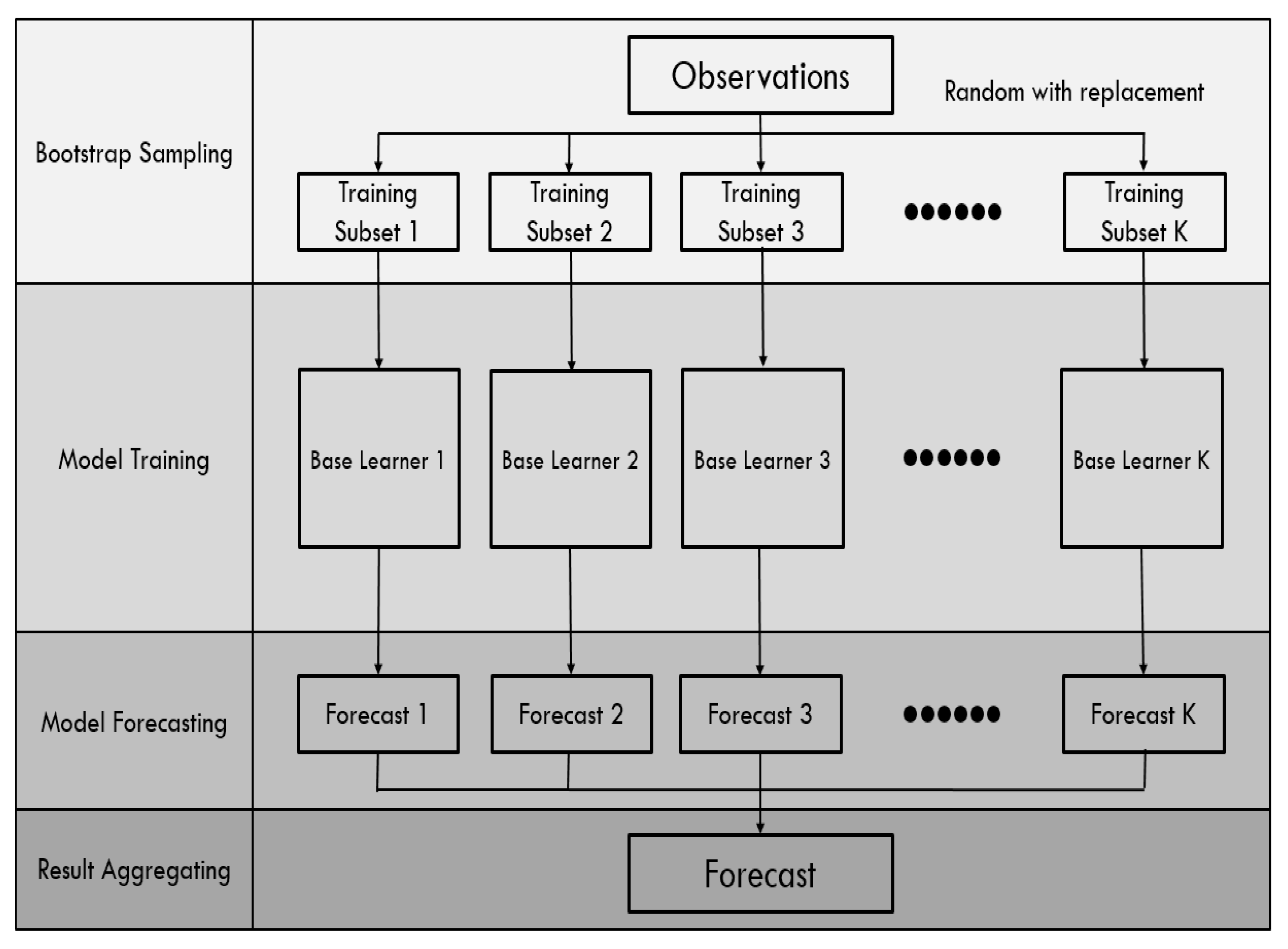

Tests with regression trees and subset selection in linear regression show that bagging can give substantial gains in accuracy. In bagging, predictors are constructed using bootstrap samples from the training set and then aggregated to form a bagged predictor.
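The construction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the original implementation: the base learner `fit_stump` (a one-split regression stump), the helper `bagged_predictor`, the choice of 25 predictors, and the toy step-function data are all hypothetical names and values chosen for the example.

```python
import random
from statistics import mean

def fit_stump(points):
    """Fit a one-split regression stump to (x, y) pairs and return a predict function."""
    pts = sorted(points)
    best = None  # (sum of squared errors, threshold, left mean, right mean)
    for i in range(1, len(pts)):
        if pts[i][0] == pts[i - 1][0]:
            continue  # cannot split between two identical x values
        threshold = (pts[i - 1][0] + pts[i][0]) / 2
        left = [y for _, y in pts[:i]]
        right = [y for _, y in pts[i:]]
        left_mean, right_mean = mean(left), mean(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # every x is identical: fall back to the overall mean
        overall = mean(y for _, y in pts)
        return lambda x: overall
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def bagged_predictor(train, fit, n_predictors=25, rng=None):
    """Train one predictor per bootstrap sample and average their outputs."""
    rng = rng or random.Random(0)
    models = []
    for _ in range(n_predictors):
        # Bootstrap sample: same size as the training set, drawn with replacement.
        sample = rng.choices(train, k=len(train))
        models.append(fit(sample))
    return lambda x: mean(model(x) for model in models)

# Toy data (assumed for illustration): a step from 0 to 1 at x = 5.
train = [(i / 10, 0.0 if i < 50 else 1.0) for i in range(100)]
predict = bagged_predictor(train, fit_stump, n_predictors=25, rng=random.Random(0))
```

For regression the aggregation step is an average, as here; for classification the usual choice is a majority vote over the individual predictors.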

5 Easy Questions On Ensemble Modeling Everyone Should Know

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees.

Let me briefly define variance and overfitting. The vital element is the instability of the prediction method. This sampling and training process is represented below.

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms. Let's assume we have a sample dataset of 1000 instances. Each bootstrap sample leaves out about 37% of the training instances.
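The figure of roughly 37% can be checked directly. When n instances are drawn with replacement from a set of n, the chance that a given instance is never drawn is (1 - 1/n)^n, which approaches 1/e (about 0.368) as n grows. The sketch below compares that formula with a small simulation for n = 1000; the function name `out_of_bag_fraction`, the seed, and the 200 repetitions are assumptions made for the example.

```python
import random

def out_of_bag_fraction(n, rng):
    """Draw one bootstrap sample of size n; return the share of indices never drawn."""
    drawn = {rng.randrange(n) for _ in range(n)}
    return 1 - len(drawn) / n

rng = random.Random(42)
n = 1000
fractions = [out_of_bag_fraction(n, rng) for _ in range(200)]
average = sum(fractions) / len(fractions)

# Probability that one fixed instance is missed by all n draws.
theoretical = (1 - 1 / n) ** n

print(f"simulated: {average:.3f}, theoretical: {theoretical:.3f}")
```

The left-out instances are often called out-of-bag instances and can serve as a built-in validation set for the predictor trained on that sample.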

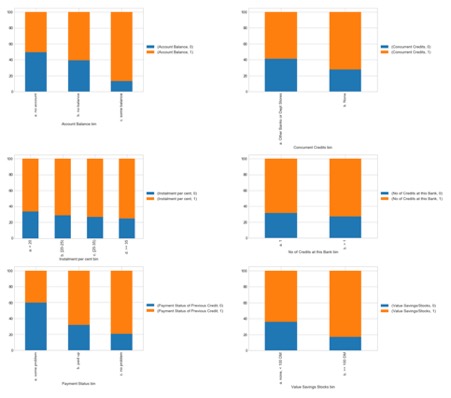

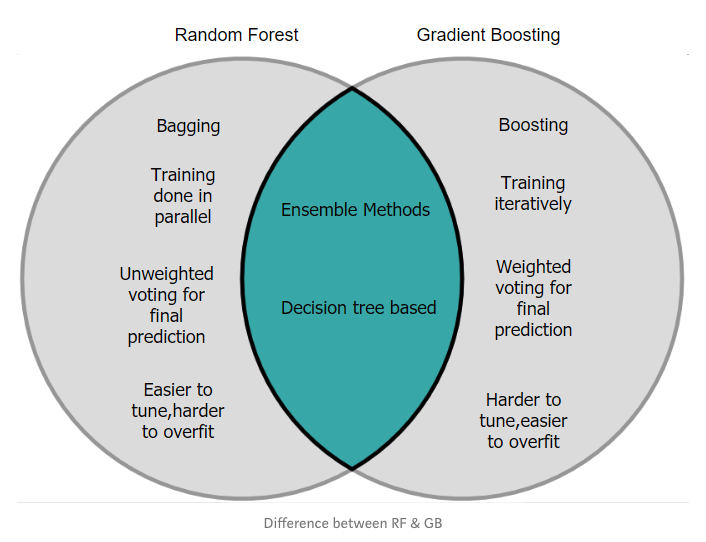

Bagging aims to reduce variance and overfitting in machine learning models. Methods such as decision trees can be prone to overfitting on the training set, which can lead to wrong predictions on new data. In other words, both bagging and pasting allow training instances to be sampled several times across multiple predictors, but only bagging allows training instances to be sampled several times for the same predictor.
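The difference between the two sampling schemes comes down to whether draws are made with replacement (bagging) or without replacement (pasting). A minimal sketch using only the standard library follows; the helper names `draw_bagging`, `draw_pasting`, and `max_repeats`, and the ten integer instance IDs, are hypothetical and chosen for illustration.

```python
import random
from collections import Counter

def draw_bagging(data, k, rng):
    """Sample k instances with replacement: one instance may appear several times."""
    return rng.choices(data, k=k)

def draw_pasting(data, k, rng):
    """Sample k instances without replacement: each instance appears at most once."""
    return rng.sample(data, k)

def max_repeats(sample):
    """Largest number of times any single instance occurs in a sample."""
    return max(Counter(sample).values())

rng = random.Random(7)
training_set = list(range(10))  # stand-in instance IDs

# Three predictors, each given its own sample of 6 instances.
bag_samples = [draw_bagging(training_set, 6, rng) for _ in range(3)]
paste_samples = [draw_pasting(training_set, 6, rng) for _ in range(3)]

for name, samples in (("bagging", bag_samples), ("pasting", paste_samples)):
    print(name, [max_repeats(s) for s in samples])
```

In both schemes an instance can show up in the samples of several predictors; only under bagging can `max_repeats` exceed 1 within a single predictor's sample.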

Evolutionary learning techniques are comparable in accuracy with other learning. When sampling is performed without replacement, it is called pasting. Published 1 August 1996, Computer Science, Machine Learning.

Customer churn prediction was carried out using AdaBoost classification and BP neural. Manufactured in The Netherlands. Bootstrap aggregation (bagging) is an ensembling method that.

Important customer groups can also be determined based on customer behavior and temporal data. Statistics Department University of. By use of numerical prediction the mean square error of the.

The change in the model's prediction. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. Improving the scalability of rule-based evolutionary learning.

Bagging, also known as bootstrap aggregation, is an ensemble learning method that is commonly used to reduce variance within a noisy dataset. Bagging is effective in reducing prediction errors when the single predictor ψ(x, L) is highly variable. In bagging a random sample.
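Why variability of ψ(x, L) matters can be seen from a short calculation. The sketch below assumes squared-error loss, a fixed input x with target y, and an idealized aggregated predictor ψ_A(x) defined as the average of ψ(x, L) over training sets L; the symbol ψ_A is introduced here for illustration and does not appear in the text above.

$$
\begin{aligned}
\psi_A(x) &= \mathbb{E}_L\big[\psi(x, L)\big], \\
\mathbb{E}_L\big[(y - \psi(x, L))^2\big]
  &= y^2 - 2y\,\psi_A(x) + \mathbb{E}_L\big[\psi(x, L)^2\big] \\
  &\ge y^2 - 2y\,\psi_A(x) + \psi_A(x)^2 \\
  &= \big(y - \psi_A(x)\big)^2 .
\end{aligned}
$$

The inequality uses $\mathbb{E}[Z^2] \ge (\mathbb{E}[Z])^2$, and the gap between the two sides is exactly $\operatorname{Var}_L\big(\psi(x, L)\big)$. The more the single predictor changes from one training set to another, the more the aggregate can gain; a stable predictor leaves little to gain. In practice bagging only approximates ψ_A, since it averages over bootstrap samples of one training set rather than over independent training sets.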

Machine Learning 24, 123-140 (1996). © 1996 Kluwer Academic Publishers, Boston.

Ml Bagging Classifier Geeksforgeeks

Ensemble Methods In Machine Learning

Chapter 10 Bagging Hands On Machine Learning With R

Learning Ensemble Bagging And Boosting Video Analysis Digital Vidya

Ensemble Methods In Machine Learning Bagging Subagging

Guide To Ensemble Methods Bagging Vs Boosting

Random Forest Introduction To Random Forest Algorithm

A Primer To Ensemble Learning Bagging And Boosting

Boosting Overview Forms Pros And Cons Option Trees

Two Stage Bagging Pruning For Reducing The Ensemble Size And Improving The Classification Performance

Super Learner Machine Learning Algorithms For Compressive Strength Prediction Of High Performance Concrete Lee Structural Concrete Wiley Online Library

Bootstrap Aggregating Wikiwand

Pdf Applying A Bagging Ensemble Machine Learning Approach To Predict Functional Outcome Of Schizophrenia With Clinical Symptoms And Cognitive Functions

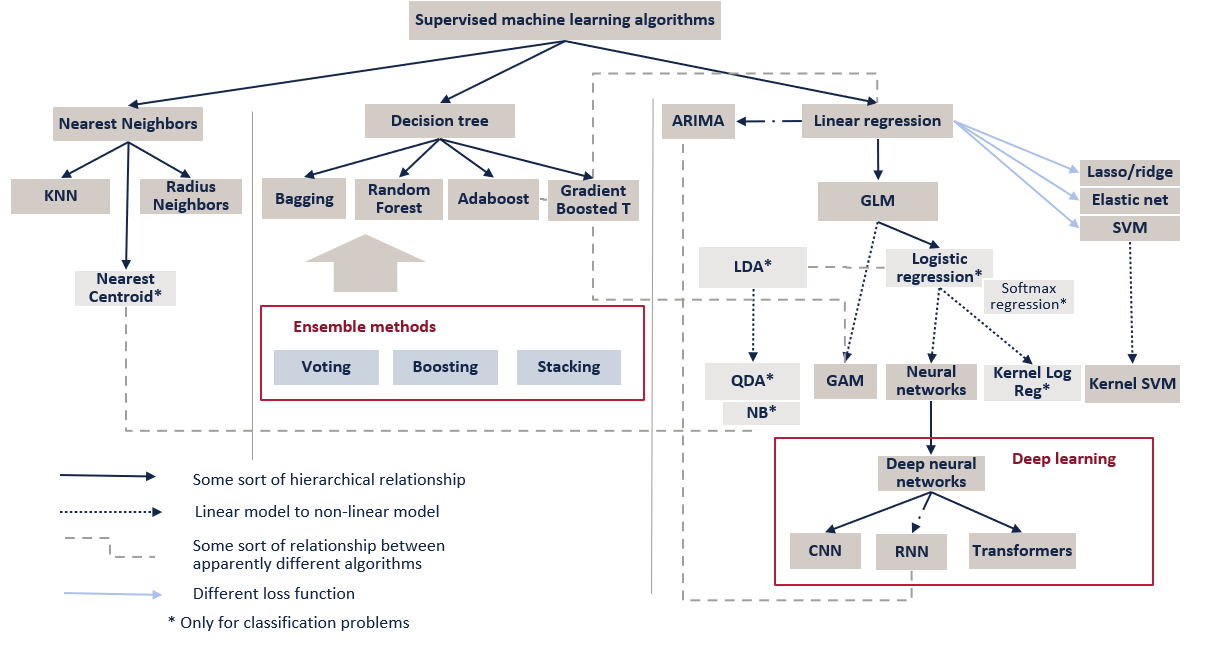

Overview Of Supervised Machine Learning Algorithms By Angela Shi Towards Data Science

Energies Free Full Text An Ensemble Learner Based Bagging Model Using Past Output Data For Photovoltaic Forecasting Html

An Empirical Study Of Bagging Predictors For Different Learning Algorithms Semantic Scholar

Supervised Machine Learning Classification A Guide Built In
